Jupyter + Pandas = Excel on steroids

Exploratory data analysis and data cleaning are among the most important aspects of any machine learning task. You cannot get the best results out of a model without properly understanding the data you work with. That is why I decided to start my machine learning journey by getting to know Pandas and Jupyter. Little did I know what an impact they would have on my daily work, even when I am not working directly on data science tasks. In this article I will introduce you to Pandas and Jupyter and show you how to get started with them. I will describe their benefits and how they have partially replaced my work in Excel and improved my efficiency. They are not the best fit for every task, so I will also bring up scenarios where you’re probably better off using other tools.

About Jupyter and Pandas

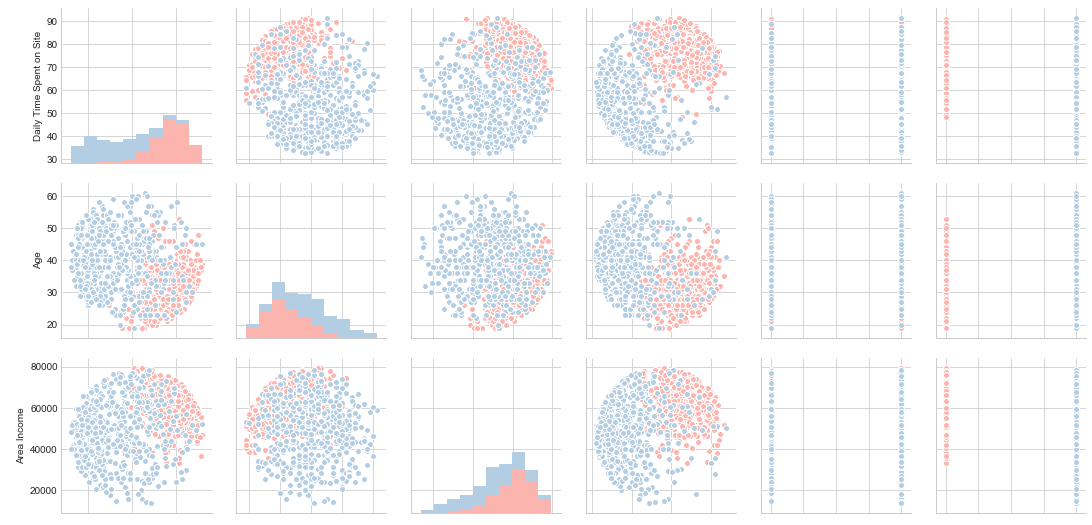

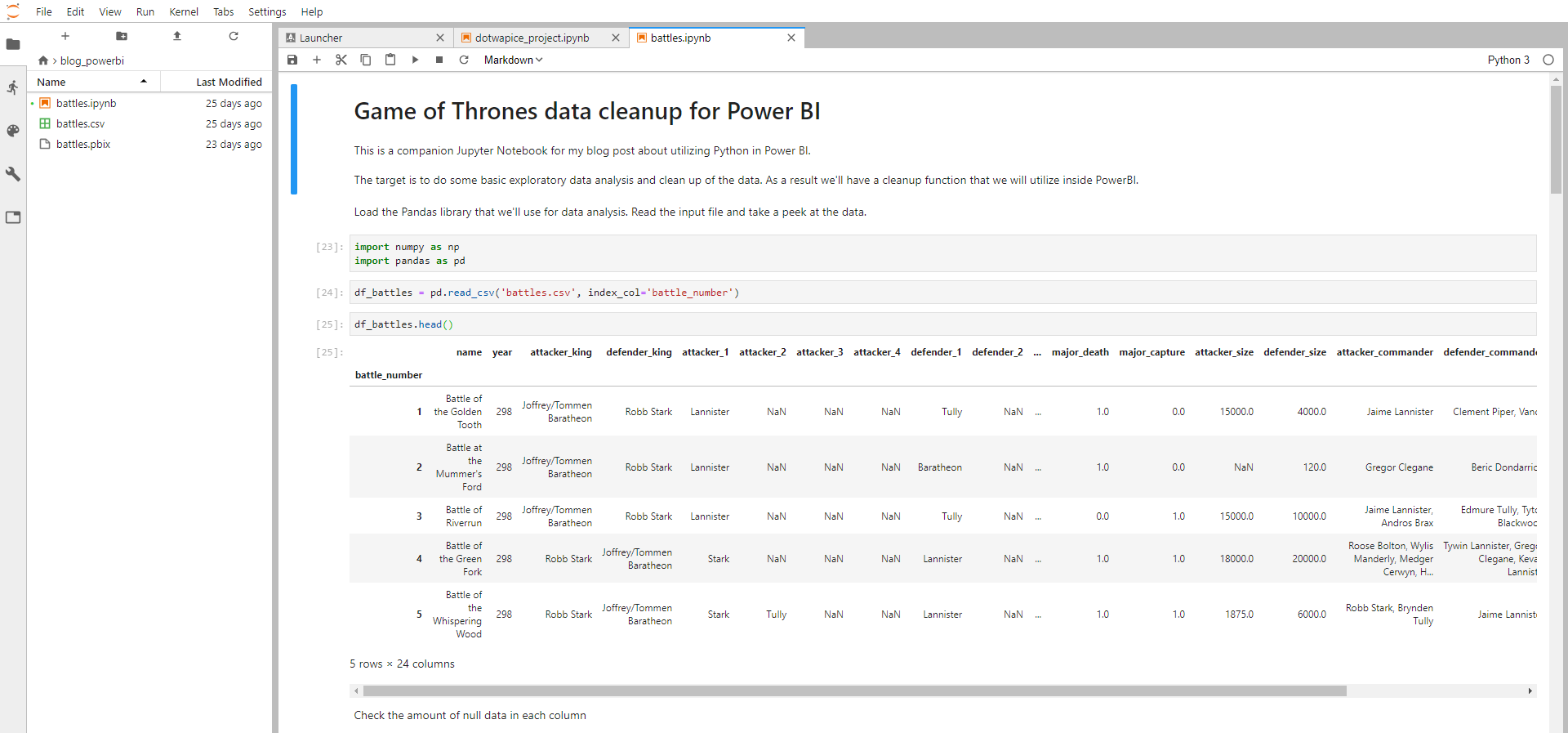

Jupyter is an interactive web application that allows you to run and visualize live code (Python in my case) instead of running it as a script or application. It also supports adding narrative text, which allows you to create reports within the tool itself. Pandas is an easy-to-use library for data structures and data analysis. Combined, these tools provide possibilities for data cleaning and transformation, data analysis, data visualization, and simple statistical modeling. The true power of Jupyter unfolds because you can use all the libraries available for Python: Seaborn for statistical visualization, scikit-learn for machine learning, and Keras for deep learning, to name a few.
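To give a feel for what working with Pandas looks like, here is a minimal sketch of a single notebook cell that loads, cleans and summarizes a small table. The column names and values are made up for illustration; the calls themselves (`pd.DataFrame`, `pd.to_numeric`, `dropna`, `describe`) are standard Pandas functions.

```python
import pandas as pd

# Hypothetical example data: hours logged per person and project
df = pd.DataFrame(
    {
        "person": ["Anna", "Ben", "Anna", "Ben", None],
        "project": ["Alpha", "Alpha", "Beta", "Beta", "Beta"],
        "hours": ["7.5", "8", "6", "n/a", "4"],
    }
)

# Typical clean-up: convert text to numbers; invalid values such as "n/a" become NaN
df["hours"] = pd.to_numeric(df["hours"], errors="coerce")

# Drop rows that are missing a person or a valid hour count
clean = df.dropna(subset=["person", "hours"])

# Quick summary statistics (count, mean, min, max, ...) for the cleaned column
summary = clean["hours"].describe()
print(clean)
print(summary)
```

In a notebook you could also leave out the `print` calls, since Jupyter displays the value of the last expression in a cell as a formatted table.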

Benefits & Drawbacks

I have used Jupyter + Pandas on many occasions, such as analyzing hourly data for budget follow-up and invoicing, helping locate inactive mobile subscriptions, cleaning up various data before passing it on as an Excel workbook, and analyzing survey results and bug tracker data. Here are some of the benefits I’ve come across:

- Python ecosystem - Since Python is a general-purpose programming language, you have almost endless possibilities for what you can do with your data: reuse code, utilize 3rd party libraries, automate recurring tasks once you have worked out the analysis in Jupyter, etc.

- Importing data from more unconventional formats - Although Excel provides basic support for importing tables from web pages, it does not come close to the possibilities offered by web scraping libraries or by importing a table directly from a PDF document.

- Efficient filtering, combining and aggregation of data - Pandas allows you to do database-like operations such as joining, grouping and querying. In addition, it has pivot functionality similar to Excel’s pivot tables.

- Quick prototyping and visualization - Unless I am working with simple data, I often reach the desired results quicker with Pandas than with Excel, even though I consider myself a fairly seasoned Excel user.
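The database-like operations mentioned in the list can be sketched as follows. The `hours` and `rates` tables are hypothetical, loosely modeled on the invoicing use case: `merge` plays the role of a join (or an Excel lookup), `query` filters rows, `groupby` aggregates, and `pivot_table` mirrors an Excel pivot table.

```python
import pandas as pd

# Hypothetical data: logged hours, and an hourly rate per project
hours = pd.DataFrame(
    {
        "person": ["Anna", "Ben", "Anna", "Ben", "Anna"],
        "project": ["Alpha", "Alpha", "Beta", "Beta", "Beta"],
        "month": ["Jan", "Jan", "Jan", "Feb", "Feb"],
        "hours": [10, 5, 8, 12, 4],
    }
)
rates = pd.DataFrame({"project": ["Alpha", "Beta"], "rate": [100, 80]})

# Join: match each row of logged hours to its project's rate (a left join)
billed = hours.merge(rates, on="project", how="left")
billed["amount"] = billed["hours"] * billed["rate"]

# Query: keep only the rows for one project
beta_only = billed.query("project == 'Beta'")

# Group: total amount to invoice per project
per_project = billed.groupby("project")["amount"].sum()

# Pivot: hours per person (rows) and month (columns), like an Excel pivot table
pivot = billed.pivot_table(
    index="person", columns="month", values="hours", aggfunc="sum", fill_value=0
)
print(per_project)
print(pivot)
```

Each step returns a new DataFrame or Series, so the intermediate results stay available for inspection in later notebook cells.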

While there are lots of benefits, there are also occasions when this might not be the best option for you. The main one is the steep learning curve, unless you have some background in programming. I wouldn’t recommend that anyone learn Python and Pandas just for the sake of replacing Excel. Stick with Excel if you’re working with simple data. Likewise, while the Python ecosystem allows for great customization of graphs and other visual components, you’re probably better off with Excel if it already offers the type of visualization and customization you need.

How to get started

There are several ways to install Python & Pandas on your computer. If you’re starting from scratch, probably the quickest way to get up and running is to install the Anaconda Distribution, which provides everything you need in one installer. If you already have a Python environment installed on your computer, you can install the libraries using pip instead. Open a command prompt/terminal and execute:

pip install pandas jupyter xlrd openpyxl

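The xlrd and openpyxl libraries are needed to read and write Excel workbooks from Pandas. In recent Pandas versions, openpyxl handles reading and writing modern .xlsx workbooks, while xlrd is only used for reading legacy .xls files.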
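As a quick check that the Excel support works, the following sketch writes a small DataFrame to a workbook and reads it back. It assumes openpyxl is installed; the data and the sheet name `Usage` are hypothetical. An in-memory buffer is used so the example leaves no files behind; in practice you would pass a file name such as `report.xlsx` instead.

```python
import io

import pandas as pd

# Hypothetical data: call counts per mobile subscription
df = pd.DataFrame({"subscription": ["A-1", "A-2"], "calls_last_90_days": [42, 0]})

# Write the DataFrame to an .xlsx workbook held in memory (requires openpyxl)
buffer = io.BytesIO()
df.to_excel(buffer, sheet_name="Usage", index=False, engine="openpyxl")

# Rewind the buffer and read the same worksheet back into a new DataFrame
buffer.seek(0)
loaded = pd.read_excel(buffer, sheet_name="Usage", engine="openpyxl")
print(loaded)
```

Passing `index=False` keeps the DataFrame’s row index out of the workbook, so the sheet contains only the two data columns.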

Installation instructions: Anaconda, Python, Pandas, Jupyter.

Once you have installed the needed packages, you can start Jupyter by double-clicking the Jupyter Notebook icon installed by Anaconda, or by executing the following command:

jupyter notebook

Starting Jupyter should automatically open the application in your default web browser. If this doesn’t happen, try navigating to http://localhost:8888, which is the default address when running Jupyter on your own computer. The next step is to get acquainted with Jupyter and Pandas. The internet is full of good resources; here are some to get you started:

- Jupyter Notebook Tutorial: The Definitive Guide by Datacamp

- Jupyter Notebook for Beginners: A Tutorial by Dataquest

- 10 Minutes to pandas, by Pandas

- Cookbook, by Pandas

- Data Analysis with Python and Pandas video tutorial by Pythonprogramming

- Data Analysis with Pandas and Python on Udemy by Boris Paskhaver

Personally, I have taken the course on Udemy, which provided a comprehensive overview of what you can do with Pandas. Especially in the beginning, I often relied on the notebooks created during the course when working on real-world problems. I hope this has gotten you excited to give Pandas a try. Let me know how you use Pandas and/or Jupyter in your work!